Thai2Vec Embeddings Examples

We use the embeddings from v0.1 since it was trained specifically for word2vec as opposed to latter versions which garner to classification. The thai2vec.bin 51,556 word embeddings of 300 dimensions, in descending order by their frequencies (See thai2vec.vocab). The files are in word2vec format readable by gensim. Most common applications include word vector visualization, word arithmetic, word grouping, cosine similarity and sentence or document vectors. For sample code, see

thwiki_lm/word2vec_examples.ipynb.

Imports

[ ]:

# #uncomment if you are running from google colab

# !pip install sklearn_crfsuite

# !pip install https://github.com/PyThaiNLP/pythainlp/archive/dev.zip

# !pip install fastai==1.0.46

# !pip install emoji

[2]:

%reload_ext autoreload

%autoreload 2

%matplotlib inline

from pythainlp.tokenize import word_tokenize

from gensim.models import KeyedVectors

import numpy as np

from sklearn.manifold import TSNE

import matplotlib.pyplot as plt

import matplotlib.font_manager as fm

import dill as pickle

import pandas as pd

model_path = './'

[3]:

#load into gensim

# model = KeyedVectors.load_word2vec_format(f'{model_path}thai2vec.bin',binary=True)

from pythainlp import word_vector

model = word_vector.WordVector(model_name="thai2fit_wv").get_model() # load thai2fit_wv from pythainlp

Corpus: thai2fit_wv

- Downloading: thai2fit_wv 0.1

[4]:

#create dataframe

thai2dict = {}

for word in model.index2word:

thai2dict[word] = model[word]

thai2vec = pd.DataFrame.from_dict(thai2dict,orient='index')

thai2vec.head(10)

[4]:

| 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | ... | 290 | 291 | 292 | 293 | 294 | 295 | 296 | 297 | 298 | 299 | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| ที่ | 0.308956 | -0.097699 | 0.116745 | 0.215612 | 0.015768 | -0.064163 | 0.062168 | 0.039649 | 0.864940 | 0.846904 | ... | -0.142418 | 0.033241 | 0.171581 | -0.624864 | -0.009358 | 0.449131 | 0.120130 | -0.122195 | -0.450617 | -0.071318 |

| และ | 0.010751 | -0.618971 | 0.129665 | 0.035460 | -0.007560 | 0.027607 | 0.397824 | 0.026543 | 0.254075 | 0.168328 | ... | -0.105786 | 0.180930 | -0.101630 | 0.070885 | -0.037263 | 0.183606 | -0.049088 | -0.672288 | -1.293044 | 0.592576 |

| เป็น | -0.015736 | -0.258926 | 0.052953 | 0.153728 | -0.005985 | -0.021081 | 0.041088 | 0.057312 | 1.633230 | 0.442729 | ... | -0.009408 | -0.252576 | -0.305512 | 0.372542 | 0.049151 | 0.568470 | 0.266586 | 0.400800 | -0.784650 | 0.197369 |

| ของ | -0.189711 | -0.174774 | 0.171124 | -0.186771 | 0.054294 | -0.114150 | -1.109456 | -0.094466 | -0.447015 | 0.042377 | ... | -0.168676 | -0.148738 | 0.680404 | 0.097702 | 0.020270 | 0.182967 | -0.083949 | 0.006287 | -0.707434 | -0.070234 |

| มี | -0.156962 | -0.231863 | 0.080312 | 0.323157 | 0.215695 | 0.055145 | 0.420794 | 0.016842 | 0.256759 | 0.832864 | ... | -0.044267 | -0.147186 | -0.105424 | 0.907078 | 0.009299 | 0.550953 | 0.139337 | 0.031696 | -0.670379 | -0.008048 |

| ได้ | -0.428813 | -0.031194 | 0.041922 | -0.036608 | -0.008106 | 0.076470 | -0.782270 | 0.033361 | 0.606864 | 0.440520 | ... | 0.024458 | -0.025031 | 0.103389 | -0.078255 | 0.034323 | 0.459774 | -0.748643 | 0.337775 | -0.487408 | -0.511535 |

| """" | -0.287710 | 0.064193 | 0.205076 | 0.146356 | -0.071343 | -0.039451 | -1.845461 | 0.163763 | 1.018096 | 0.272786 | ... | 0.051024 | -0.532856 | -0.131856 | -0.090323 | -0.058895 | 0.151262 | -0.420358 | 0.055971 | -0.930814 | 0.163908 |

| การ | 0.239587 | -0.303620 | 0.079953 | -0.453045 | -0.528826 | -0.161692 | 0.235725 | -0.099673 | 0.691668 | 0.536159 | ... | -0.110436 | -0.297495 | -0.217414 | 0.045158 | 0.066647 | 0.190095 | -0.304333 | -0.724927 | -0.995488 | -0.716609 |

| ( | -0.120522 | -0.355783 | 0.168180 | -0.377733 | -0.158624 | -0.047249 | 0.361140 | 0.161460 | 0.913314 | 0.345037 | ... | 0.116285 | -0.318218 | -0.356664 | 0.519889 | 0.130475 | 0.125772 | 0.101328 | -0.382658 | -1.205359 | 0.340139 |

| ) | -0.086848 | -0.155231 | 0.133015 | -0.039913 | 0.183761 | 0.115142 | -1.940854 | -0.066565 | -2.399744 | 0.146722 | ... | 0.019406 | -0.181474 | 0.099863 | 0.516092 | 0.201697 | 0.249139 | 0.252957 | 1.138815 | -0.018209 | 0.232265 |

10 rows × 300 columns

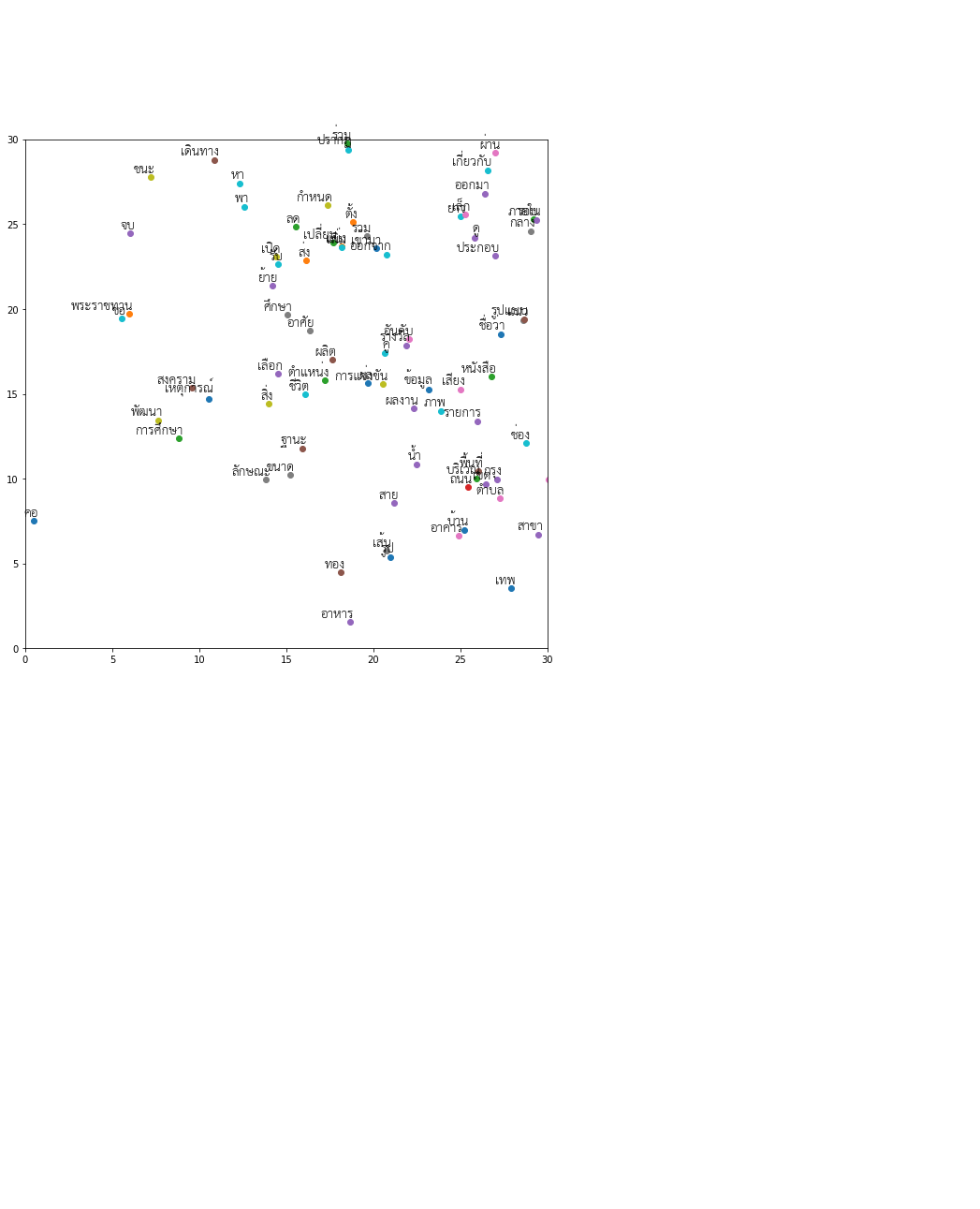

Using t-SNE, we can compress the 300 dimensions of each word into a 2D plane and plot their relationships.

[ ]:

!wget https://github.com/PyThaiNLP/tutorials/releases/download/thai2plot/thai2plot.pkl

[ ]:

labels = model.index2word

# #tnse

# tsne = TSNE(n_components=2, init='pca', n_iter=1000)

# thai2plot = tsne.fit_transform(thai2vec)

# pickle.dump(thai2plot,open(f'{model_path}thai2plot.pkl','wb'))

thai2plot = pickle.load(open(f'{model_path}thai2plot.pkl','rb'))

[7]:

labels[:10]

[7]:

['ที่', 'และ', 'เป็น', 'ของ', 'มี', 'ได้', '""""', 'การ', '(', ')']

[10]:

!wget https://github.com/jeffmcneill/thai-font-collection/raw/master/downloadable-free-thai-fonts/dip-sipa/TH-Sarabun-New-Regular.ttf

--2022-12-17 16:26:35-- https://github.com/jeffmcneill/thai-font-collection/raw/master/downloadable-free-thai-fonts/dip-sipa/TH-Sarabun-New-Regular.ttf

Resolving github.com (github.com)... 140.82.114.4

Connecting to github.com (github.com)|140.82.114.4|:443... connected.

HTTP request sent, awaiting response... 302 Found

Location: https://raw.githubusercontent.com/jeffmcneill/thai-font-collection/master/downloadable-free-thai-fonts/dip-sipa/TH-Sarabun-New-Regular.ttf [following]

--2022-12-17 16:26:35-- https://raw.githubusercontent.com/jeffmcneill/thai-font-collection/master/downloadable-free-thai-fonts/dip-sipa/TH-Sarabun-New-Regular.ttf

Resolving raw.githubusercontent.com (raw.githubusercontent.com)... 185.199.110.133, 185.199.111.133, 185.199.108.133, ...

Connecting to raw.githubusercontent.com (raw.githubusercontent.com)|185.199.110.133|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 479628 (468K) [application/octet-stream]

Saving to: ‘TH-Sarabun-New-Regular.ttf’

TH-Sarabun-New-Regu 100%[===================>] 468.39K --.-KB/s in 0.04s

2022-12-17 16:26:36 (12.6 MB/s) - ‘TH-Sarabun-New-Regular.ttf’ saved [479628/479628]

[11]:

#stolen from https://blog.manash.me/how-to-use-pre-trained-word-vectors-from-facebooks-fasttext-a71e6d55f27

def plot_with_labels(low_dim_embs, labels, filename=None, figsize=(10,10),

axis_lims = None):

assert low_dim_embs.shape[0] >= len(labels), "More labels than embeddings"

plt.figure(figsize=figsize) # in inches

for i, label in enumerate(labels):

x, y = low_dim_embs[i, :]

plt.scatter(x, y)

prop = fm.FontProperties(fname=f'TH-Sarabun-New-Regular.ttf',size=20)

plt.annotate(label,

fontproperties=prop,

xy=(x, y),

xytext=(5, 2),

textcoords='offset points',

ha='right',

va='bottom')

if axis_lims is not None: plt.axis(axis_lims)

if filename: plt.savefig(filename)

plot_with_labels(thai2plot[200:500],labels[200:500],axis_lims = [0,30,0,30])

Word Arithmetic

You can do simple “arithmetic” with words based on the word vectors such as: * ผู้หญิง + พระราชา - ผู้ชาย = พระราชินี * นายกรัฐมนตรี - อำนาจ = ประธานาธิบดี * กิ้งก่า + โบราณ = ไดโนเสาร์

[12]:

#word arithmetic

model.most_similar_cosmul(positive=['พระราชา','ผู้หญิง'], negative=['ผู้ชาย'])

[12]:

[('พระราชินี', 0.7954867482185364),

('กษัตริย์', 0.7382755279541016),

('พระเจ้า', 0.7046602368354797),

('เจ้าชาย', 0.6979373097419739),

('พระมหากษัตริย์', 0.6972416639328003),

('เจ้าฟ้าหญิง', 0.6871017217636108),

('พระเจ้าแผ่นดิน', 0.6827988624572754),

('พระพุทธเจ้า', 0.671796977519989),

('มกุฎราชกุมาร', 0.6711805462837219),

('นายพล', 0.6694187521934509)]

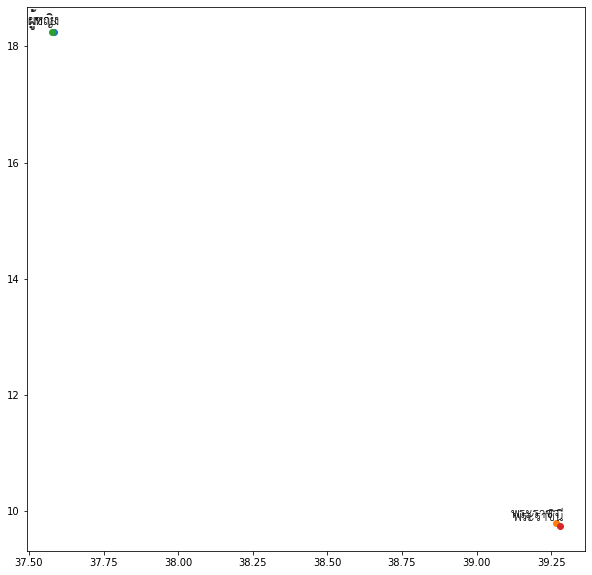

[13]:

sample_words = ['ผู้หญิง','พระราชา','ผู้ชาย','พระราชินี']

sample_idx = []

for word in sample_words:

sample_idx.append(labels.index(word))

sample_plot = thai2plot[sample_idx]

plot_with_labels(sample_plot,sample_words)

[14]:

model.most_similar_cosmul(positive=['นายกรัฐมนตรี'],negative=['อำนาจ'])

[14]:

[('รองนายกรัฐมนตรี', 1.4945054054260254),

('รัฐมนตรี', 1.400755763053894),

('ประธานาธิบดี', 1.3626699447631836),

('พันเอก', 1.3437265157699585),

('ผู้บัญชาการทหารบก', 1.3405414819717407),

('ผู้กำกับภาพยนตร์', 1.3339321613311768),

('นักฟุตบอล', 1.331659197807312),

('เอกอัครราชทูต', 1.3306005001068115),

('แห้ง', 1.3243674039840698),

('สุภาพสตรี', 1.3231494426727295)]

[15]:

sample_words = ['นายกรัฐมนตรี','อำนาจ','รองนายกรัฐมนตรี']

sample_idx = []

for word in sample_words:

sample_idx.append(labels.index(word))

sample_plot = thai2plot[sample_idx]

plot_with_labels(sample_plot,sample_words)

[16]:

#word arithmetic

model.most_similar_cosmul(positive=['สัตว์','พืช'], negative=[])

[16]:

[('สิ่งมีชีวิต', 0.537461519241333),

('สัตว์เลี้ยงลูกด้วยนม', 0.5080005526542664),

('แมลง', 0.5048903226852417),

('ผลไม้', 0.4839756190776825),

('มนุษย์', 0.47641509771347046),

('ผัก', 0.46431201696395874),

('สัตว์น้ำ', 0.45941096544265747),

('ต้นไม้', 0.45185261964797974),

('พันธุ์ไม้', 0.4504697620868683),

('ไม้ยืนต้น', 0.44425833225250244)]

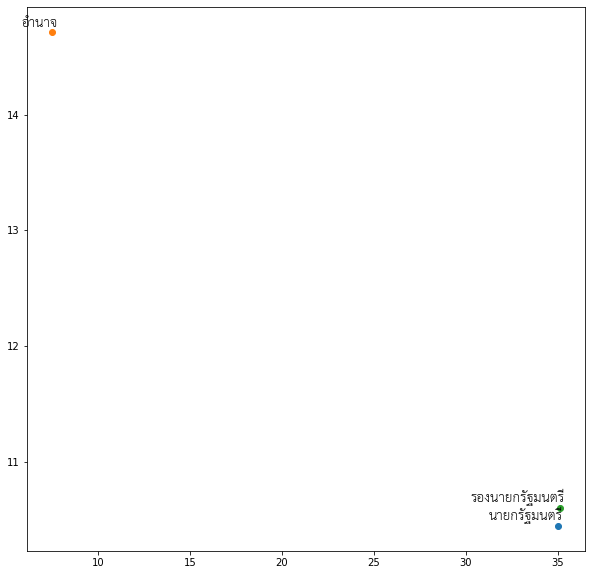

[17]:

sample_words = ['สัตว์','พืช','สิ่งมีชีวิต']

sample_idx = []

for word in sample_words:

sample_idx.append(labels.index(word))

sample_plot = thai2plot[sample_idx]

plot_with_labels(sample_plot,sample_words)

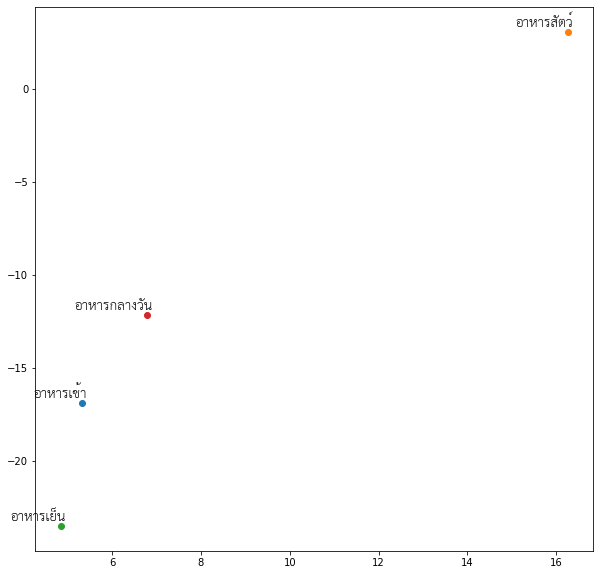

Doesn’t Match

It can also be used to do word groupings. For instance: * อาหารเช้า อาหารสัตว์ อาหารเย็น อาหารกลางวัน - อาหารสัตว์ is type of food whereas others are meals in the day * ลาก ดึง ดูด ดัน - ดัน is pushing while the rest is pulling. * กด กัด กิน เคี้ยว - กด is not verbs for the eating process Note that this could be relying on a different “take” than you would expect. For example, you could have answered ลูกเขย in the second example because it is the one associated with male gender.

[18]:

model.doesnt_match("อาหารเช้า อาหารสัตว์ อาหารเย็น อาหารกลางวัน".split())

/usr/local/lib/python3.8/dist-packages/gensim/models/keyedvectors.py:895: FutureWarning: arrays to stack must be passed as a "sequence" type such as list or tuple. Support for non-sequence iterables such as generators is deprecated as of NumPy 1.16 and will raise an error in the future.

vectors = vstack(self.word_vec(word, use_norm=True) for word in used_words).astype(REAL)

[18]:

'อาหารสัตว์'

[19]:

sample_words = "อาหารเช้า อาหารสัตว์ อาหารเย็น อาหารกลางวัน".split()

sample_idx = []

for word in sample_words:

sample_idx.append(labels.index(word))

sample_plot = thai2plot[sample_idx]

plot_with_labels(sample_plot,sample_words)

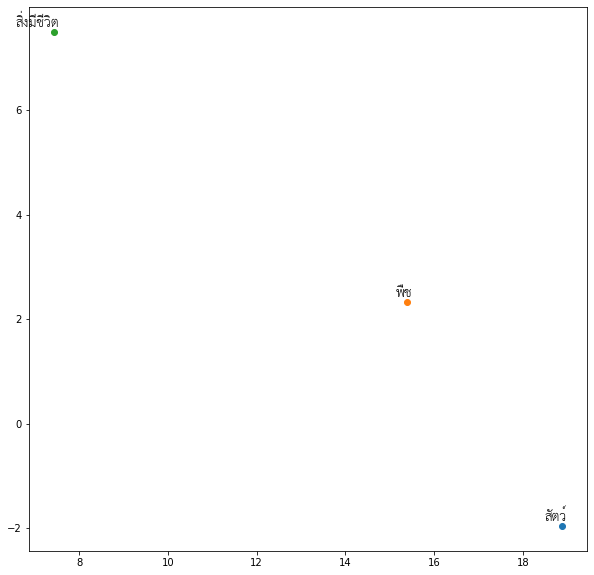

[20]:

model.doesnt_match("ลาก ดึง ดูด ดัน".split())

[20]:

'ดัน'

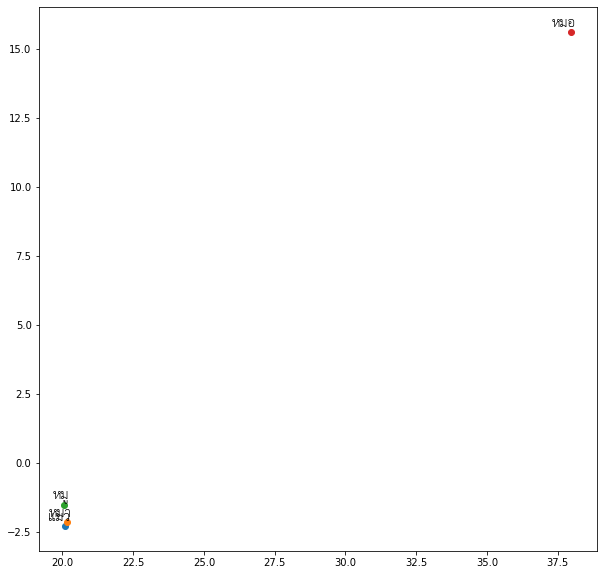

[21]:

sample_words = "ลาก ดึง ดูด ดัน".split()

sample_idx = []

for word in sample_words:

sample_idx.append(labels.index(word))

sample_plot = thai2plot[sample_idx]

plot_with_labels(sample_plot,sample_words)

[22]:

model.doesnt_match("แมว หมา หมู หมอ".split())

[22]:

'หมอ'

[23]:

sample_words = "แมว หมา หมู หมอ".split()

sample_idx = []

for word in sample_words:

sample_idx.append(labels.index(word))

sample_plot = thai2plot[sample_idx]

plot_with_labels(sample_plot,sample_words)

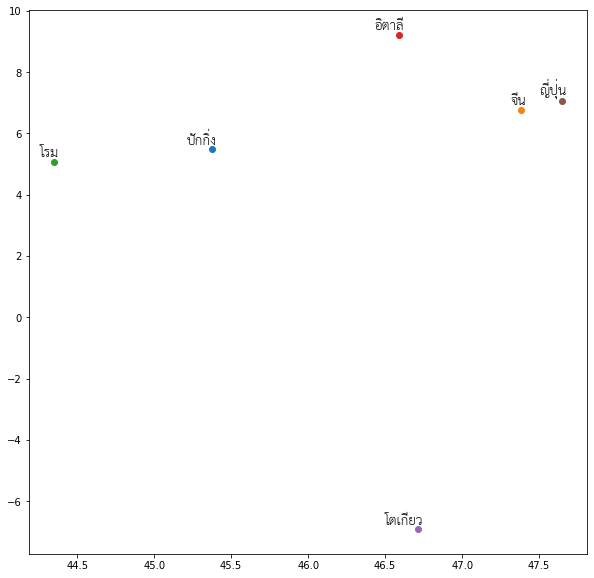

Cosine Similarity

[24]:

print('China + Beijing:', model.similarity('ปักกิ่ง', 'จีน'))

print('Italy + Rome:', model.similarity('โรม','อิตาลี'))

print('Beijing + Rome:', model.similarity('โรม', 'ปักกิ่ง'))

print('Italy + Beijing:', model.similarity('ปักกิ่ง', 'อิตาลี'))

print('China + Rome:', model.similarity('โรม','จีน'))

print('China + Italy:', model.similarity('อิตาลี','จีน'))

China + Beijing: 0.3135956

Italy + Rome: 0.42819628

Beijing + Rome: 0.27347285

Italy + Beijing: 0.17900795

China + Rome: 0.02666693

China + Italy: 0.24352394

[25]:

sample_words = "ปักกิ่ง จีน โรม อิตาลี โตเกียว ญี่ปุ่น".split()

sample_idx = []

for word in sample_words:

sample_idx.append(labels.index(word))

sample_plot = thai2plot[sample_idx]

plot_with_labels(sample_plot,sample_words)

Spellchecking

Originally contributed by Sakares ATV, adapted from Kaggle Spell Checker using Word2vec by CPMP.

[26]:

words = model.index2word

w_rank = {}

for i,word in enumerate(words):

w_rank[word] = i

WORDS = w_rank

[27]:

thai_letters = 'กขฃคฅฆงจฉชซฌญฎฏฐฑฒณดตถทธนบปผฝพฟภมยรฤฤๅลฦฦๅวศษสหฬอฮะัาำิีึืุูเแโใไ็่้๊๋์'

def words(text): return re.findall(r'\w+', text.lower())

def P(word):

"Probability of `word`."

# use inverse of rank as proxy

# returns 0 if the word isn't in the dictionary

return - WORDS.get(word, 0)

def correction(word):

"Most probable spelling correction for word."

return max(candidates(word), key=P)

def candidates(word):

"Generate possible spelling corrections for word."

return (known([word]) or known(edits1(word)) or known(edits2(word)) or [word])

def known(words):

"The subset of `words` that appear in the dictionary of WORDS."

return set(w for w in words if w in WORDS)

def edits1(word):

"All edits that are one edit away from `word`."

letters = thai_letters

splits = [(word[:i], word[i:]) for i in range(len(word) + 1)]

deletes = [L + R[1:] for L, R in splits if R]

transposes = [L + R[1] + R[0] + R[2:] for L, R in splits if len(R)>1]

replaces = [L + c + R[1:] for L, R in splits if R for c in letters]

inserts = [L + c + R for L, R in splits for c in letters]

return set(deletes + transposes + replaces + inserts)

def edits2(word):

"All edits that are two edits away from `word`."

return (e2 for e1 in edits1(word) for e2 in edits1(e1))

[28]:

correction('พัดนา')

[28]:

'พัฒนา'

[29]:

correction('ขริง')

[29]:

'จริง'

[30]:

correction('จย้า')

[30]:

'จ้า'

[31]:

correction('นะค่ะ')

[31]:

'นะคะ'

[ ]: